The Internet of Things (IoT) is becoming more critical for several reasons. First, IoT technology can improve the efficiency of numerous processes by providing real-time monitoring, analytics, and automation. The enhanced efficiency can lead to cost savings, boosted productivity, and reduced waste. Second, IoT enables seamless connectivity between devices, systems, and people. This connectivity can enable new forms of collaboration, innovation, and decision-making and improve the customer experience. Third, IoT generates vast amounts of data, which can be analyzed using advanced analytics techniques to gain insights into trends, patterns, and anomalies. Therefore, organizations can make data-driven decisions and improve their operations. Fourth, IoT can improve safety in various domains, such as manufacturing, healthcare, and transportation, by providing real-time monitoring, predictive analytics, and automation. Fifth, IoT can play a crucial role in achieving sustainable development goals, such as reducing greenhouse gas emissions, conserving natural resources, and improving social and economic well-being.

Research Areas

Networking Protocols

— Networking Technologies; Standards and Protocols

— Energy Efficiency; Bandwidth and Latency

— Machine Learning Methods for Enhancing Communication Efficiency

Network Security

— Security Protocols and Architectures for Residential, Campus, and Enterprise Networks

— Leveraging AI for Enforcing Network Security

— Device Vulnerability; Data Privacy

Development of Edge to Cloud Systems

— Data Management and Analytics

— Network Infrastructure

— Interoperability

Edge Computing and Distributed AI

— Design and Development of Edge Computing and Distributed AI Methods

— Communication and Computational Resources Allocation on Edge and Fog Computing Systems

Embedded Systems

— Design and Interfacing of Embedded Systems

— Energy Modeling, Measurement, and Management

Evaluation and Modeling

— Empirical, Simulation-based, and Analytical Performance Evaluation of IoT Systems

Join the Team

Please email your CV to Dr. Behnam Dezfouli:

bdezfouli [at] scu [dot] edu

SIOTLAB's GitHub

Click Here!

Sample Projects

Communication Protocols for Internet of Things Systems

Communication protocols play a major role in terms of the reliability, energy efficiency, and timeliness of IoT systems. We address these requirements by developing novel protocols across the communication stack.

Tags: WiFi 6 (802.11ax); IoT Traffic; Throughput; Traffic Characterization; TWT; Scheduling; Network Telemetry

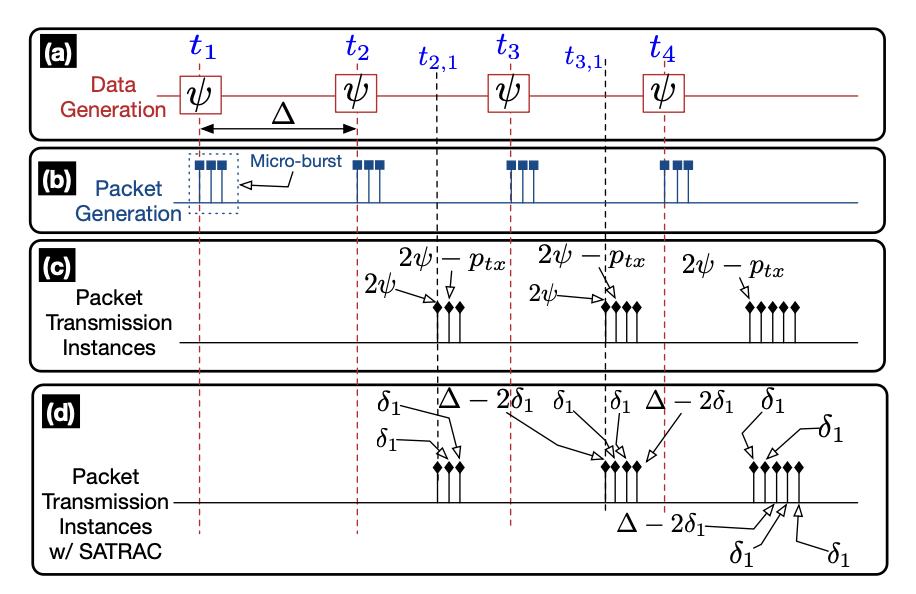

Traffic Characterization for Efficient TWT Scheduling in 802.11ax IoT Networks

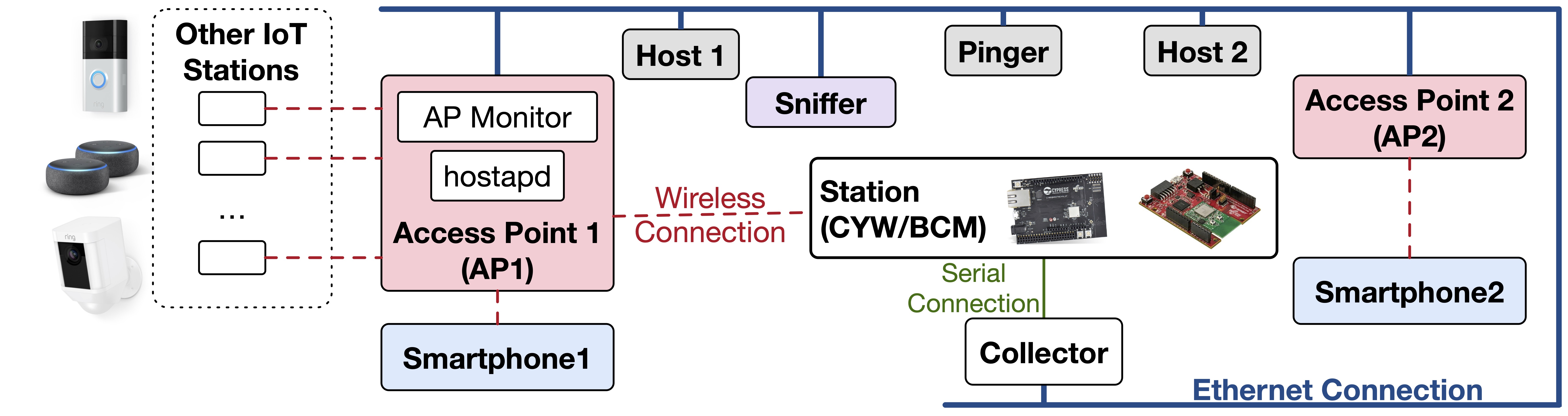

To reduce packet collisions and enhance the energy efficiency of stations, Target Wake Time (TWT), which is a feature of the 802.11ax standard (WiFi 6), allows the allocation of communication service periods to stations. While effective TWT allocation requires characterizing the traffic pattern of stations, in this paper, we empirically study and reveal that the existing methods (i.e., channel utilization estimation, packet sniffing, and buffer status report) do not provide adequate accuracy. To remedy this problem, we propose a traffic characterization method that can accurately capture inter-packet and inter-burst intervals on a per-flow basis in the presence of factors such as channel access and packet preparation delay. We empirically evaluate the proposed method and confirm its superior traffic characterization performance against the existing ones. We also present a sample TWT allocation scenario that leverages the proposed method to enhance throughput.
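As a rough illustration of the two quantities named above, the following Python sketch splits one flow's packet timestamps into bursts and derives inter-packet and inter-burst intervals. The function name `characterize_flow` and the gap threshold `burst_gap_s` are assumptions made for this example. The sketch also ignores channel access and packet preparation delay, which the proposed method accounts for, so it should be read as a simplified picture rather than the paper's algorithm.

```python
from typing import Dict, List


def characterize_flow(timestamps: List[float], burst_gap_s: float) -> Dict[str, List[float]]:
    """Split one flow's packet timestamps (seconds) into bursts.

    A gap larger than burst_gap_s (a hypothetical threshold) starts a new
    burst. Gaps inside a burst are inter-packet intervals; gaps between the
    first packets of consecutive bursts are inter-burst intervals.
    """
    ts = sorted(timestamps)
    inter_packet: List[float] = []
    burst_starts: List[float] = []
    for i, t in enumerate(ts):
        if i == 0 or t - ts[i - 1] > burst_gap_s:
            burst_starts.append(t)  # first packet of a new burst
        else:
            inter_packet.append(t - ts[i - 1])
    inter_burst = [b - a for a, b in zip(burst_starts, burst_starts[1:])]
    return {"inter_packet": inter_packet, "inter_burst": inter_burst}
```

A scheduler could, for instance, use the inter-burst interval as a candidate TWT wake interval and the burst length as a hint for the service period duration.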

Tags: WiFi (802.11); IoT; Kernel modules; eBPF; Observability; Energy Monitoring

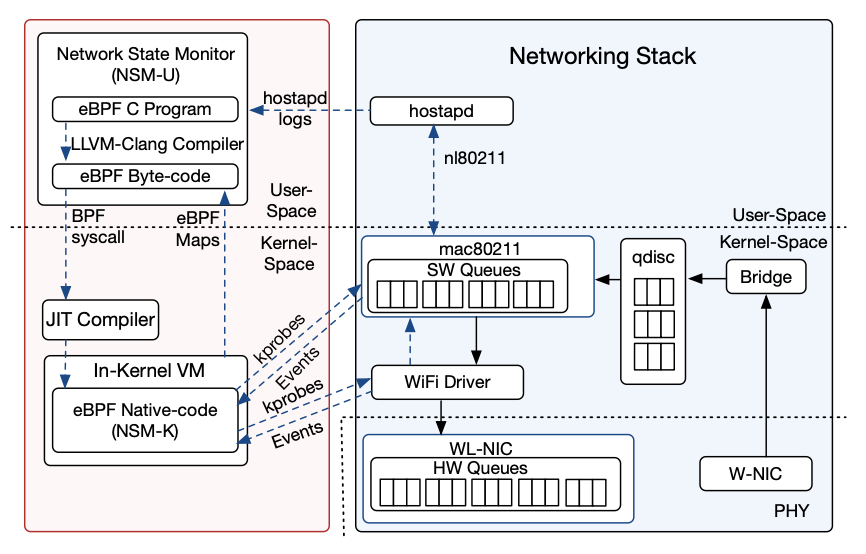

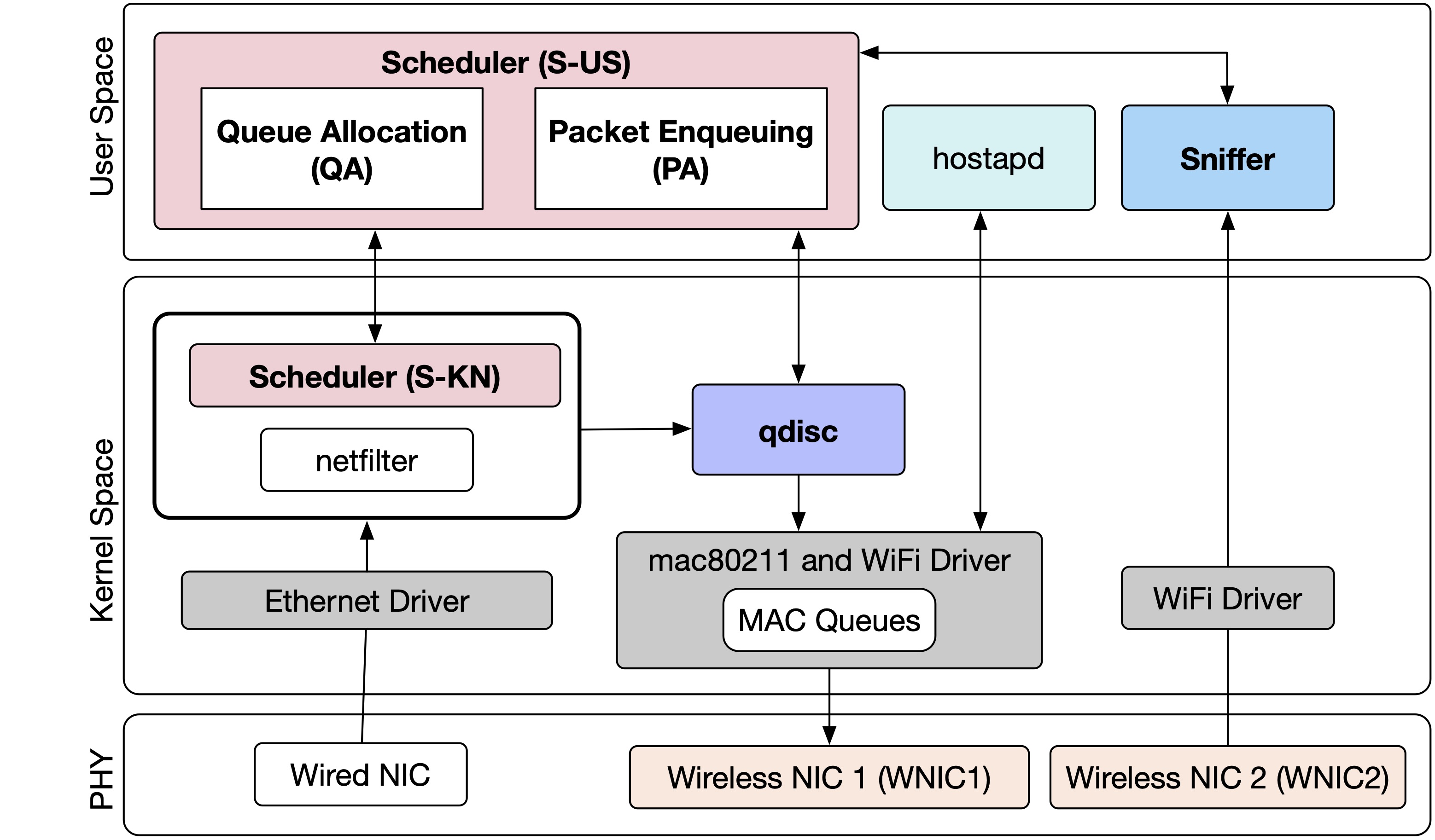

FLIP: A Framework for Leveraging eBPF to Augment WiFi Access Points and Investigate Network Performance

Monitoring WiFi networks is essential to gain insight into the network operation and develop methods capable of reacting to network dynamics. However, research and development in this field are hindered by the lack of a framework that can be easily extended to collect various types of monitoring data from the WiFi stack. In this paper, we propose FLIP, a framework for leveraging eBPF to augment WiFi access points and investigate the performance of WiFi networks. Using this framework, we focus on two important aspects of monitoring the WiFi stack. First, considering the high delay experienced by packets at access points, we show how switching packets from the wired interface to the wireless interface can be monitored and timestamped accurately at each step. We build a testbed using FLIP access points and investigate the factors affecting packet delay experienced in access points. Second, we present a novel approach that allows access points to track the duty-cycling pattern and energy consumption of their associated stations accurately and without the need for any external energy measurement tools. We validate the high energy measurement accuracy of FLIP by empirical experiments and comparisons against a commercial tool.

Tags: WiFi (802.11); IoT; Delay Prediction; Machine Learning; Traffic Characterization; Latency

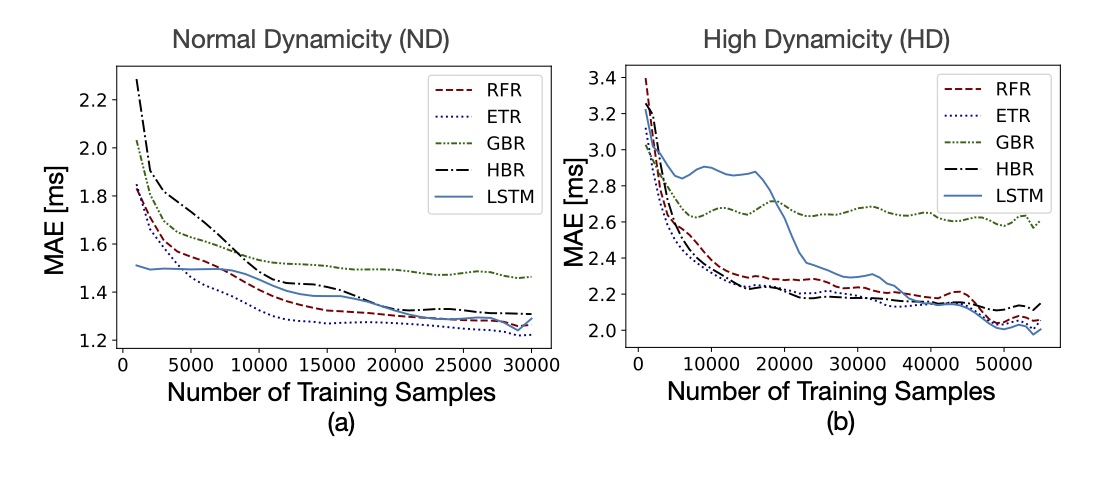

EAPS: Edge-Assisted Predictive Sleep Scheduling for 802.11 IoT Stations

The main reasons justifying the importance of WiFi for IoT connectivity are: (1) compared to indoor cellular gateways, WiFi Access Points (APs) are more popular and broadly deployed, (2) compared to cellular communication, WiFi reduces deployment cost, allows for local communication, and enables users and enterprises to enforce a higher level of control over their network, (3) compared to Bluetooth Low Energy (BLE) (275-300 nJ/bit), WiFi provides a lower (10-100 nJ/bit) physical layer energy consumption, and (4) compared to 802.15.4, WiFi provides 100-10000x higher data rates.
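To make the energy-per-bit comparison in point (3) concrete, here is a small arithmetic sketch in Python. The function name `phy_energy_nj` and the 1000-byte payload are assumptions chosen for illustration. Only physical layer energy is counted, so association, idle listening, and protocol overhead are outside its scope.

```python
def phy_energy_nj(payload_bytes: int, nj_per_bit: float) -> float:
    """Physical layer energy, in nanojoules, to transfer payload_bytes."""
    return payload_bytes * 8 * nj_per_bit


# Hypothetical 1000-byte payload, using the per-bit figures quoted above.
wifi_worst_case_nj = phy_energy_nj(1000, 100)  # upper end of the WiFi range
ble_best_case_nj = phy_energy_nj(1000, 275)    # lower end of the BLE range
```

Even when the least favorable WiFi figure is compared against the most favorable BLE figure, the per-bit numbers put the BLE transfer at 2.75 times the physical layer energy of the WiFi transfer.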

When used in IoT applications, Power Save Mode (PSM) increases latency and intensifies channel access contention after each beacon instance, and Adaptive Power Save Delivery (APSD) does not inform stations about when they need to wake up to receive their downlink packets.

In this project, we present a data-driven method to predict the communication delay between AP and IoT stations. With this method, IoT stations can switch back to sleep mode and wake up only when the AP has some data packets ready to be delivered to them. We have fully implemented this method in a Linux AP and evaluated system performance in multiple real-world environments.

Tags: Ultra-High-Rate Sampling; Industrial Control; Medical Monitoring; WiFi (802.11); IoT; RTOS; Encoding; Packet Processing; Time Synchronization; ADC

Sensifi: A Wireless Sensing System for Ultra-High-Rate Applications

Wireless Sensor Networks (WSNs) are being used in various applications such as structural health monitoring and industrial control. Since energy efficiency is one of the major design factors, the existing WSNs primarily rely on low-power, low-rate wireless technologies such as 802.15.4 and Bluetooth.

In this work, by proposing Sensifi, we strive to tackle the challenges of developing ultra-high-rate WSNs based on the 802.11 (WiFi) standard. As an illustrative structural health monitoring application, we consider spacecraft vibration testing and identify system design requirements and challenges.

Our main contributions are as follows. First, we propose packet encoding methods to reduce the overhead of assigning accurate timestamps to samples. Second, we propose energy efficiency methods to enhance the system’s lifetime. Third, to enhance sampling rate and mitigate sampling rate instability, we reduce the overhead of processing outgoing packets through the network stack. Fourth, we study and reduce the delay of processing time synchronization packets through the network stack. Fifth, we propose a low-power node design particularly targeting vibration monitoring. Sixth, we use our node design to empirically evaluate energy efficiency, sampling rate, and data rate. We leave large-scale evaluations as future work.
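One way to picture the first contribution is an encoding in which all samples in a packet share a single base timestamp and sampling period, so that no per-sample timestamp is transmitted. The Python sketch below assumes that layout. The header format, field widths, and the names `encode_packet` and `decode_packet` are hypothetical and are not taken from Sensifi.

```python
import struct
from typing import List, Tuple

# Assumed header: base timestamp (us, 8 bytes), sample period (ns, 4 bytes),
# sample count (2 bytes). Little-endian, no padding, 14 bytes in total.
HEADER = struct.Struct("<QIH")


def encode_packet(base_ts_us: int, period_ns: int, samples: List[int]) -> bytes:
    """Pack signed 16-bit samples behind one shared timestamp header."""
    header = HEADER.pack(base_ts_us, period_ns, len(samples))
    return header + struct.pack(f"<{len(samples)}h", *samples)


def decode_packet(packet: bytes) -> List[Tuple[float, int]]:
    """Rebuild (timestamp_us, sample) pairs from the shared header."""
    base_ts_us, period_ns, count = HEADER.unpack_from(packet, 0)
    samples = struct.unpack_from(f"<{count}h", packet, HEADER.size)
    return [(base_ts_us + i * period_ns / 1000, s) for i, s in enumerate(samples)]
```

With this layout, timestamp overhead is a fixed 14 bytes per packet instead of growing with the number of samples, at the cost of assuming a stable sampling period within each packet.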

Tags: WiFi (802.11); IoT; Delay; Energy; Packet Scheduling; Simulation; Testbed

Enhancing the Energy-Efficiency and Timeliness of IoT Communication in WiFi Networks

Increasing the number of Internet of Things (IoT) stations or regular stations escalates downlink channel access contention and queuing delay, which in turn result in higher energy consumption and longer communication delays with IoT stations.

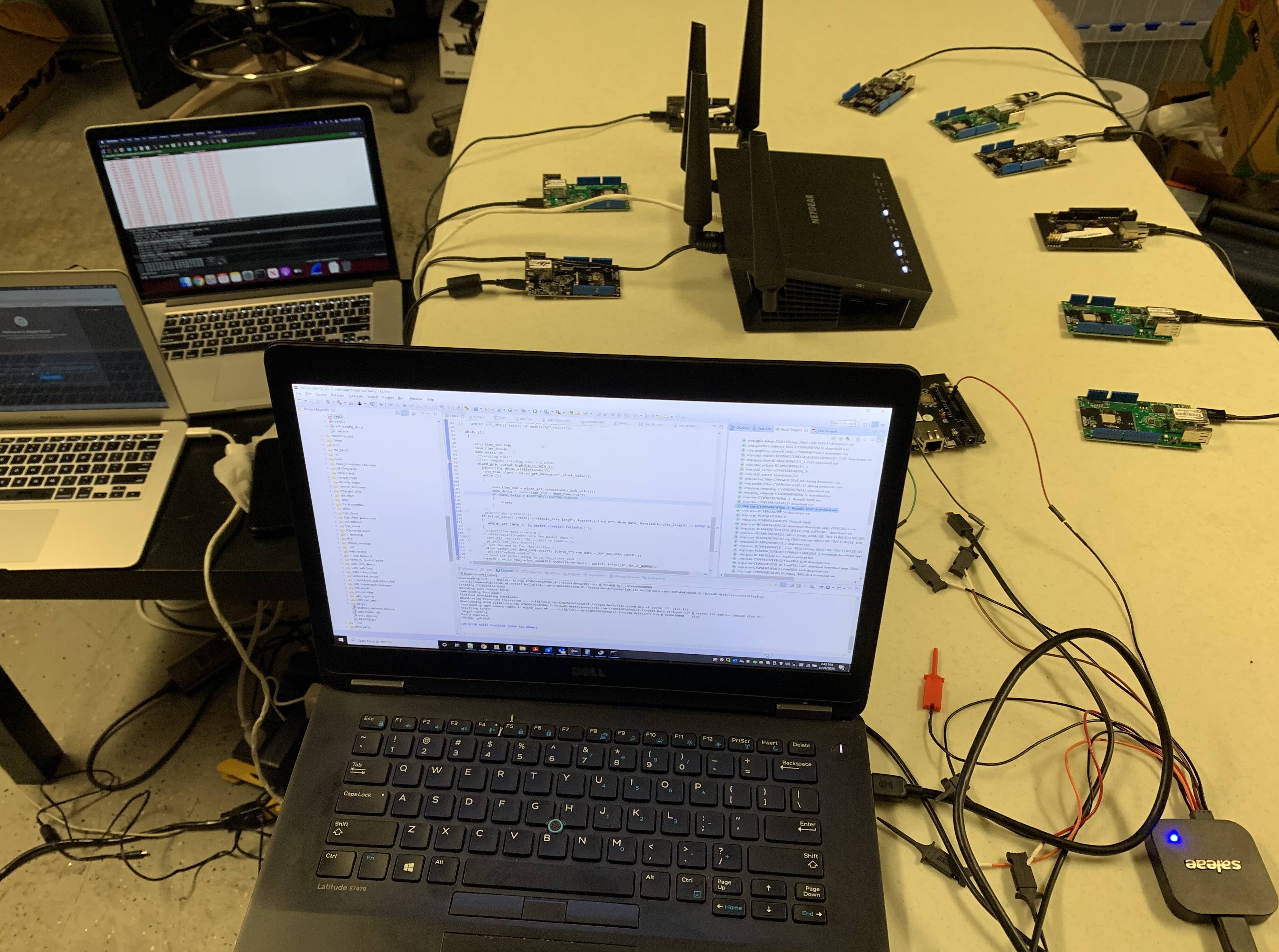

To remedy this problem, this work presents WiFi IoT access point (Wiotap), an enhanced WiFi access point (AP) that implements a downlink packet scheduling mechanism. In addition to assigning higher priority to IoT traffic compared to regular traffic, the scheduling algorithm computes per-packet priorities to arbitrate the contention between the transmission of IoT packets. This algorithm employs a least-laxity first (LLF) scheme that assigns priorities based on the remaining wake-up time of the destination stations.
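A minimal sketch of a least-laxity-first selection step is shown below, assuming each queued packet carries an estimate of its airtime and of the time at which its destination station returns to sleep. The `Packet` fields and the `pick_next` helper are hypothetical names for illustration. The sketch does not handle packets whose laxity is already negative, and it is not Wiotap's actual implementation.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Packet:
    dest: str
    is_iot: bool
    tx_time_s: float        # estimated airtime of this packet
    wake_deadline_s: float  # when the destination station returns to sleep


def laxity(pkt: Packet, now_s: float) -> float:
    """Slack left before the station sleeps if transmission starts now."""
    return pkt.wake_deadline_s - now_s - pkt.tx_time_s


def pick_next(queue: List[Packet], now_s: float) -> Optional[Packet]:
    """Serve IoT traffic first; among IoT packets, least laxity first."""
    if not queue:
        return None
    # Tuple key: IoT packets sort first (False < True), then smallest laxity.
    return min(queue, key=lambda p: (not p.is_iot, laxity(p, now_s)))
```

Regular traffic can be modeled with `wake_deadline_s=float("inf")`, which keeps it behind any IoT packet in this ordering.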

Tags: WiFi (802.11); IoT; Association; Energy; Security

Empirical Study and Enhancement of Association and Long Sleep in 802.11 IoT Systems

The three essential operations performed to ensure connectivity in an 802.11 (WiFi) network are association, maintaining association, and periodic beacon reception. Understanding and enhancing the energy efficiency of these operations is essential for building IoT systems. Unfortunately, the overheads of these operations have not been studied considering the station’s software and hardware configuration, access point configuration, and link unreliability.

In this project, we build a testbed and show that: (i) association cost depends on multiple factors including probing, key generation, operating system, and network stack, (ii) increasing the listen interval to reduce beacon reception wake-up instances may negatively impact energy efficiency, (iii) maintaining association by relying on the poll messages generated by the access point is not reliable, and (iv) key renewal increases the chance of disassociation.

Tags: WiFi; Monitoring; Programmability; Linux; Event; Polling; Testbed

MonFi: A Tool for High-Rate, Efficient, and Programmable Monitoring of WiFi Devices

The increasing number of WiFi devices, their stringent communication requirements, and the need for higher energy efficiency mandate the adoption of novel methods that rely on monitoring the WiFi communication stack to analyze these networks, enhance their communication efficiency, and secure them. In this project, we propose MonFi, a publicly available, open-source tool for high-rate, efficient, and programmable monitoring of the WiFi communication stack. With this tool, regular user-space applications can specify their required measurement parameters, monitoring rate, and measurement collection method (event-based, polling-based, or a hybrid of both).

We also propose methods to ensure a deterministic sampling rate regardless of the processor load caused by other processes such as packet switching. In terms of sampling rate and processing efficiency, we show that MonFi outperforms the Linux tools used to monitor the communication stack.

Tags: IoT; Transport Layer; TCP; UDP; QUIC; MQTT; Overhead; Testbed

Tags: WiFi; Mesh; Control; Programmability

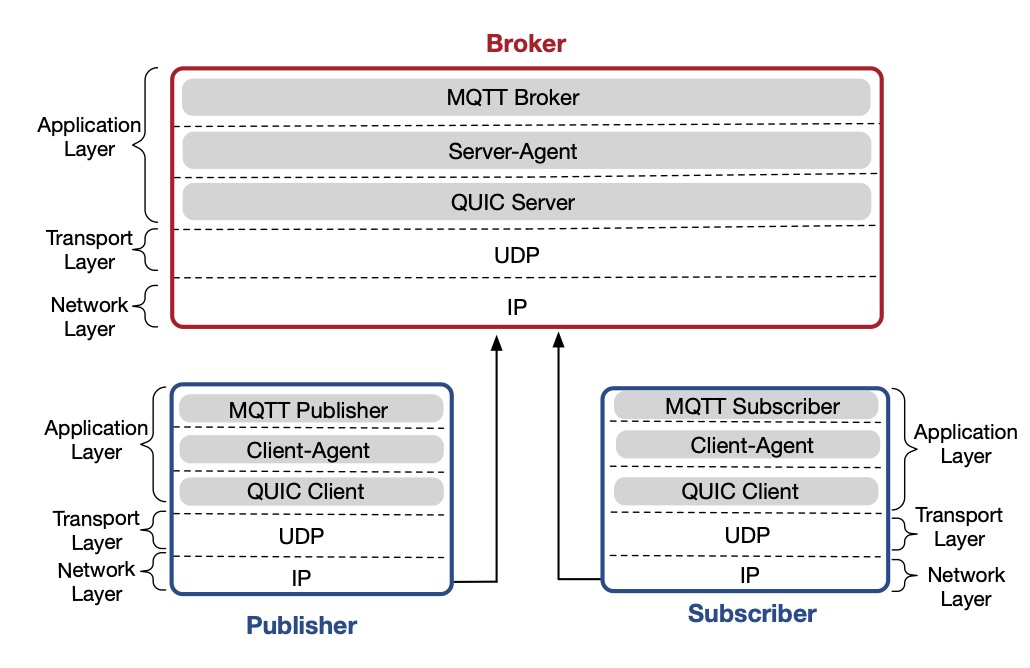

Implementation and Analysis of QUIC for MQTT

The existing transport and security protocols – namely TCP/TLS and UDP/DTLS – fall short in terms of connection overhead, latency, and connection migration when used in IoT applications.

In this project, after studying the root causes of these shortcomings, we show how utilizing QUIC in IoT scenarios results in higher performance. Based on these observations, and given the popularity of MQTT as an IoT application layer protocol, we integrate MQTT with QUIC. By presenting the main APIs and functions developed, we explain how connection establishment and message exchange functionalities work. We evaluate the performance of MQTTw/QUIC versus MQTTw/TCP using wired, wireless, and long-distance testbeds.
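The connection overhead argument can be illustrated with a back-of-the-envelope calculation of how many round trips elapse before the first application byte can be sent. The round-trip counts in the Python sketch below are the commonly cited handshake costs for each stack, not measurements from this project. The sketch ignores processing time, packet loss, and the MQTT CONNECT/CONNACK exchange, and the name `setup_delay_ms` is an assumption.

```python
# Round trips before application data can flow (commonly cited values).
HANDSHAKE_RTTS = {
    "tcp+tls1.2": 3,  # 1 RTT for TCP, 2 RTTs for a full TLS 1.2 handshake
    "tcp+tls1.3": 2,  # 1 RTT for TCP, 1 RTT for TLS 1.3
    "quic": 1,        # combined transport and crypto handshake
    "quic-0rtt": 0,   # resumed connection sending early data
}


def setup_delay_ms(transport: str, rtt_ms: float) -> float:
    """Idealized connection setup delay for a given round-trip time."""
    return HANDSHAKE_RTTS[transport] * rtt_ms
```

On a hypothetical long-distance link with a 100 ms round-trip time, this idealized model puts TCP with TLS 1.2 at 300 ms of setup delay versus 100 ms for a fresh QUIC connection.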

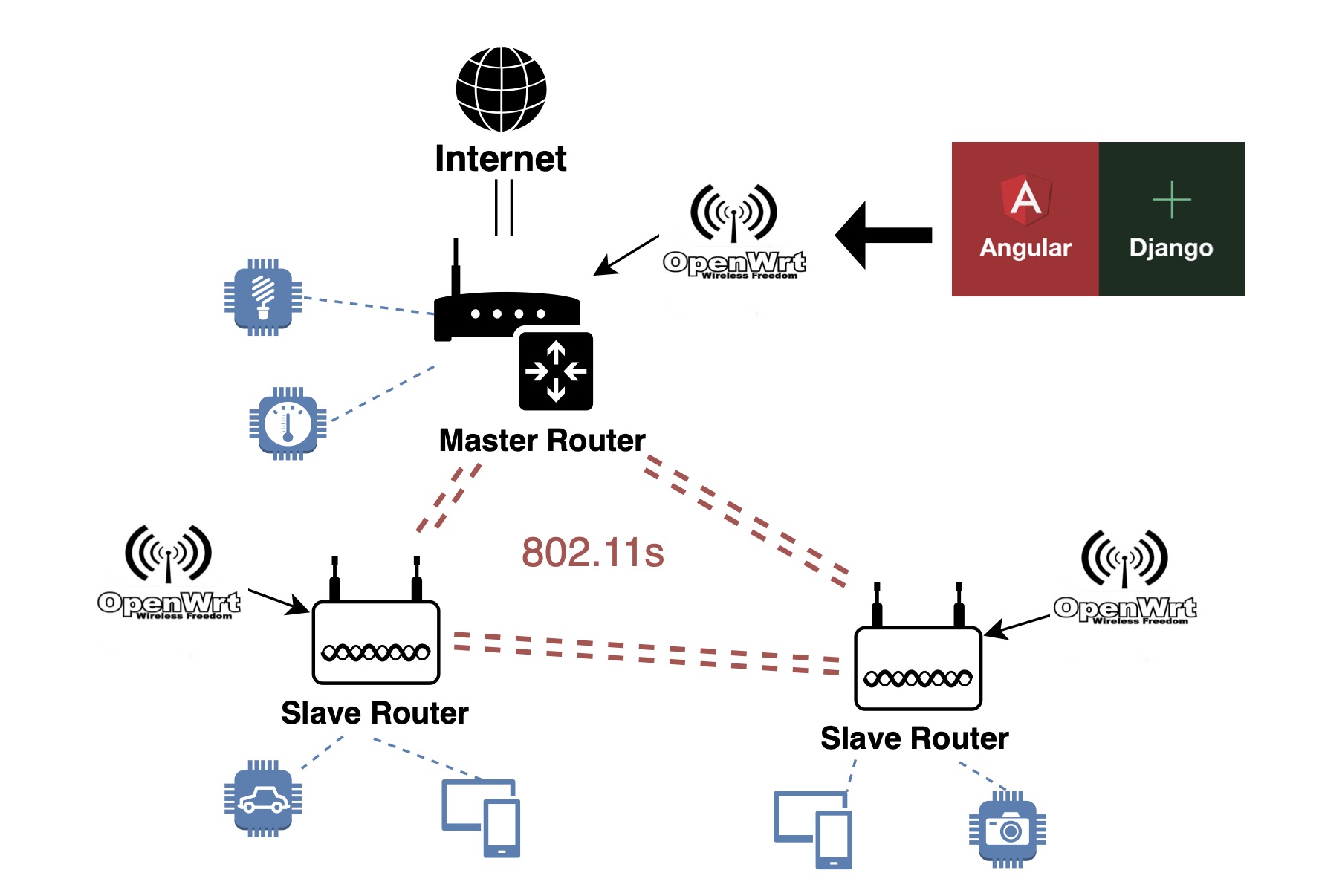

CentriFi: A Centralized Wireless Access Point Management Platform

With the ubiquity of wireless end-devices, more strain is placed on standard network deployment architectures. Mesh networks have started to rise in popularity in order to meet the needs of modern wireless networks. However, the existing solutions for deploying and centrally configuring mesh networks leave much to be desired, as most are either too expensive or too cumbersome.

This work showcases a solution to this problem: CentriFi, an open-source platform built to run on OpenWrt access points that provides a quick and easy way to set up and configure mesh networks from a central location using the 802.11s standard. CentriFi provides a web-based front-end for configuring the most crucial settings. Further, the system allows for greater expandability by providing a platform to which other configuration features can be added by the open-source community in the future.

Tags: Wireless; Mobile; Real-time; Scheduling; Simulation

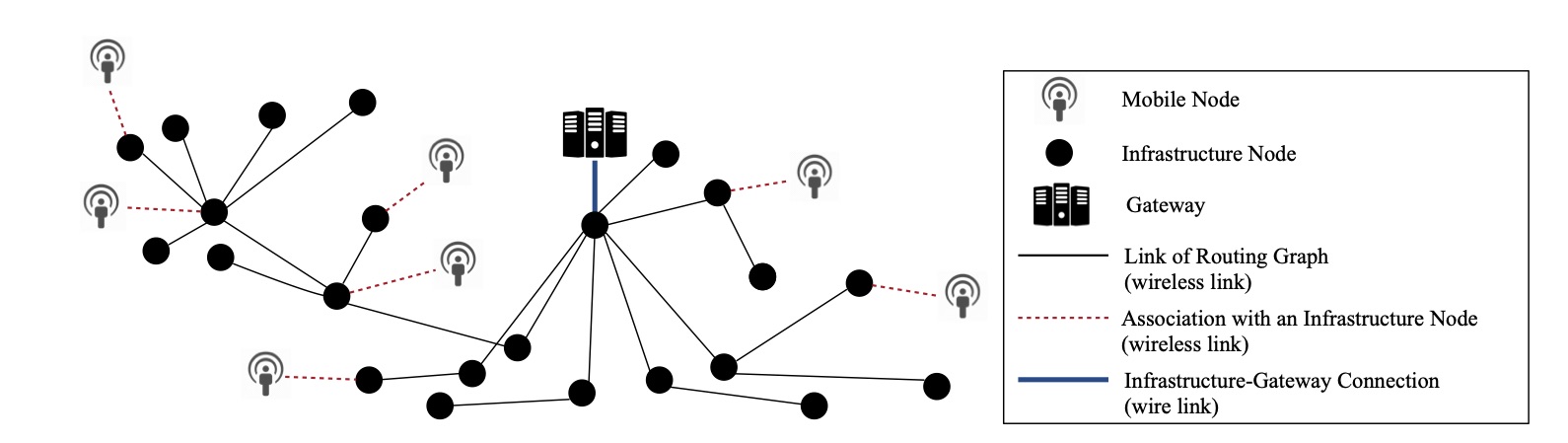

REWIMO: A Real-Time and Reliable Low-Power Wireless Mobile Network

Industrial applications and cyber-physical systems rely on real-time wireless networks to deliver data in a timely and reliable manner. However, existing solutions provide these guarantees only for stationary nodes.

This project presents REWIMO, a solution for real-time and reliable communications in mobile networks. REWIMO has a two-tier architecture composed of (i) infrastructure nodes and (ii) mobile nodes that associate with infrastructure nodes as they move. REWIMO employs an on-join bandwidth reservation approach and benefits from a set of techniques to efficiently reserve bandwidth for each mobile node at the time of its admission and over its potential communication paths. To ensure association of mobile nodes with infrastructure nodes over high-quality links, REWIMO uses a two-phase scheduling technique to coordinate neighbor discovery with data transmission. To mitigate the overhead of handling network dynamics, REWIMO employs an additive scheduling algorithm, which is capable of additive bandwidth reservation without modifying existing schedules.

Additional Publications

Low-Power Wireless for the Internet of Things: Standards and Applications

Predictive Interference Management for Wireless Channels in the Internet of Things

A Quantitative Study of DDoS and E-DDoS Attacks on WiFi Smart Home Devices

A Comprehensive Experimental Evaluation of Radio Irregularity in BLE Networks

Edge, Fog and Cloud Computing

With the increase in the processing power of embedded systems, new process-intensive IoT applications are being introduced for tasks such as object classification. Edge and fog computing are essential to satisfy the stringent latency requirements of mission-critical applications, reduce network utilization, decrease the processing overhead of resource-constrained devices, enhance security, and ensure system operation in the presence of intermittent network connectivity.

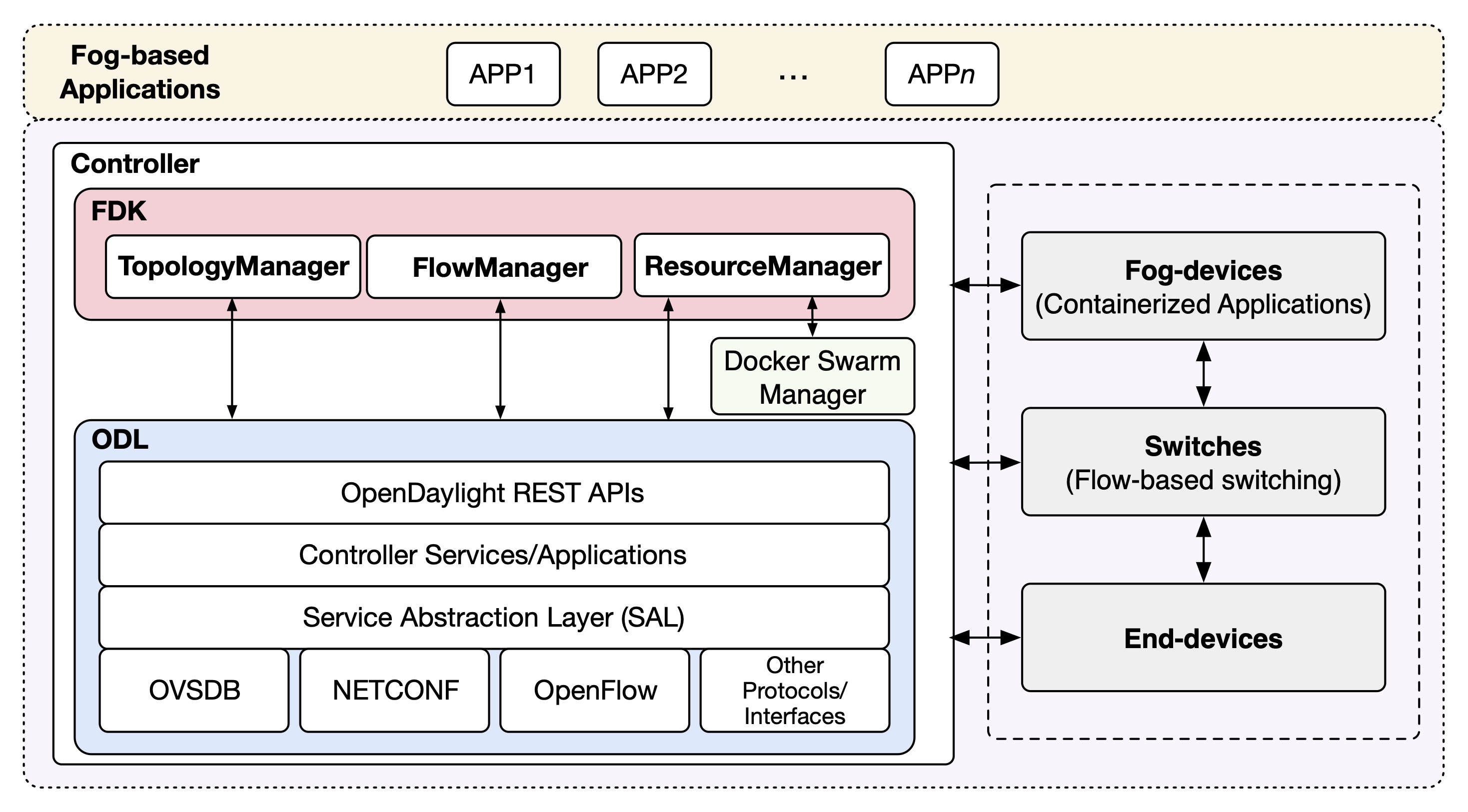

The Fog Development Kit: A Platform for the Development and Management of Fog Systems

Since fog computing is a relatively new field, there is no standard platform for research and development in a realistic environment, and this dramatically inhibits innovation and development of fog-based applications.

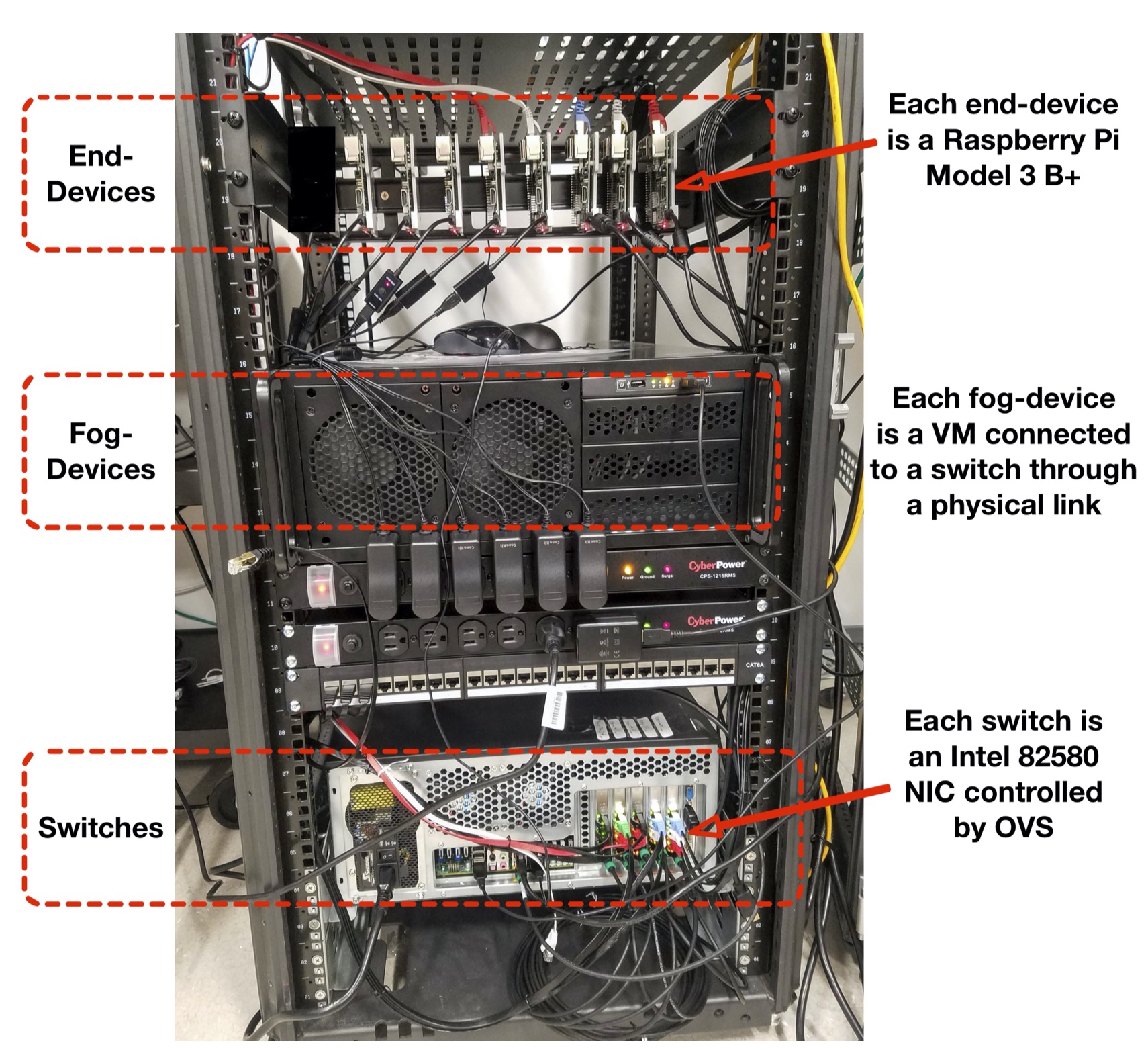

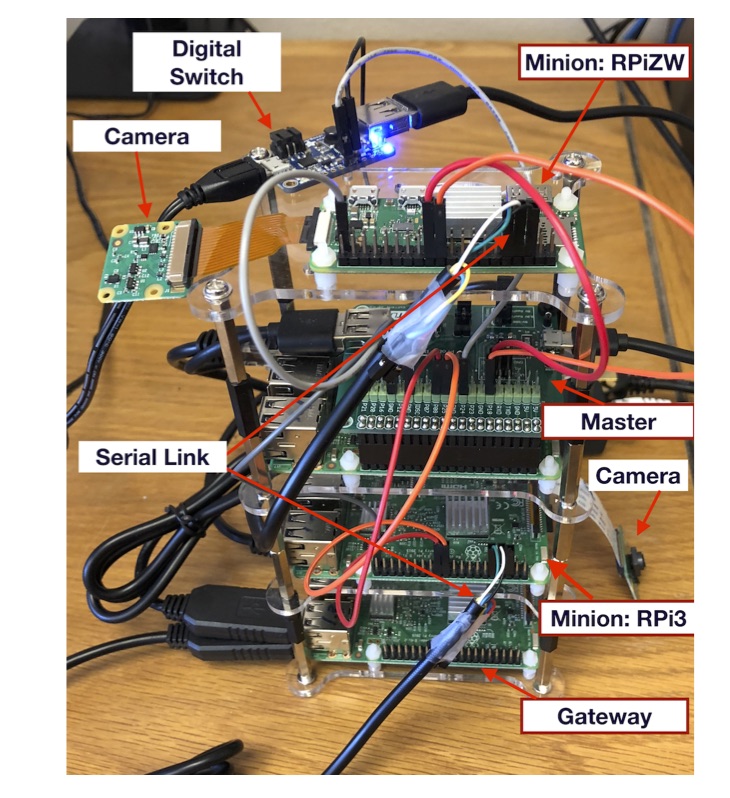

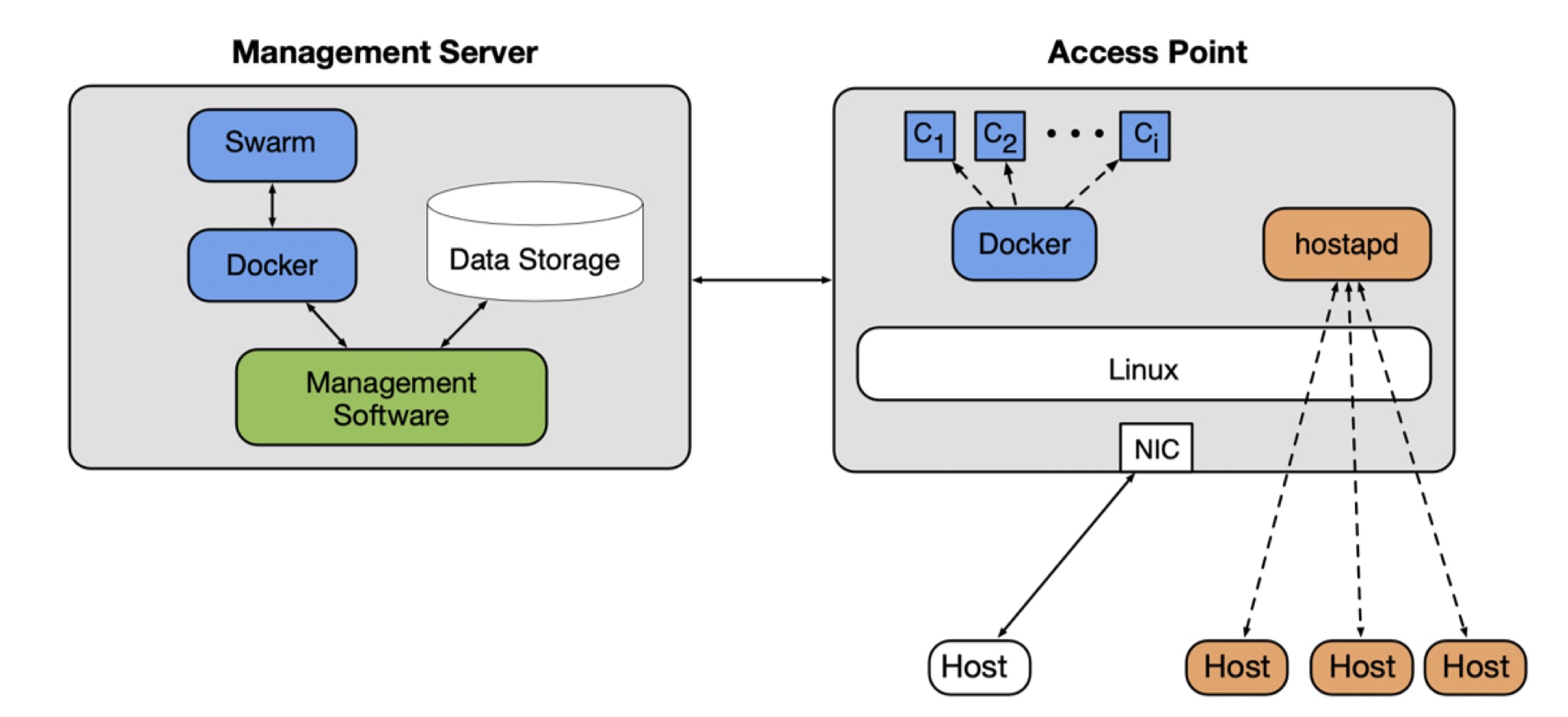

We propose the fog development kit (FDK). By providing high-level interfaces for allocating computing and networking resources, the FDK abstracts the complexities of fog computing from developers and enables the rapid development of fog systems. In addition to supporting application development on a physical deployment, the FDK supports the use of emulation tools (e.g., GNS3 and Mininet) to create realistic environments, allowing fog application prototypes to be built with zero additional costs and enabling seamless portability to a physical infrastructure. Using a physical testbed and various kinds of applications running on it, we verify the operation and study the performance of the FDK.

Tags: Edge Computing; Fog Computing; Resource Allocation; Container; SDN; OpenFlow; OVSDB; Testbed

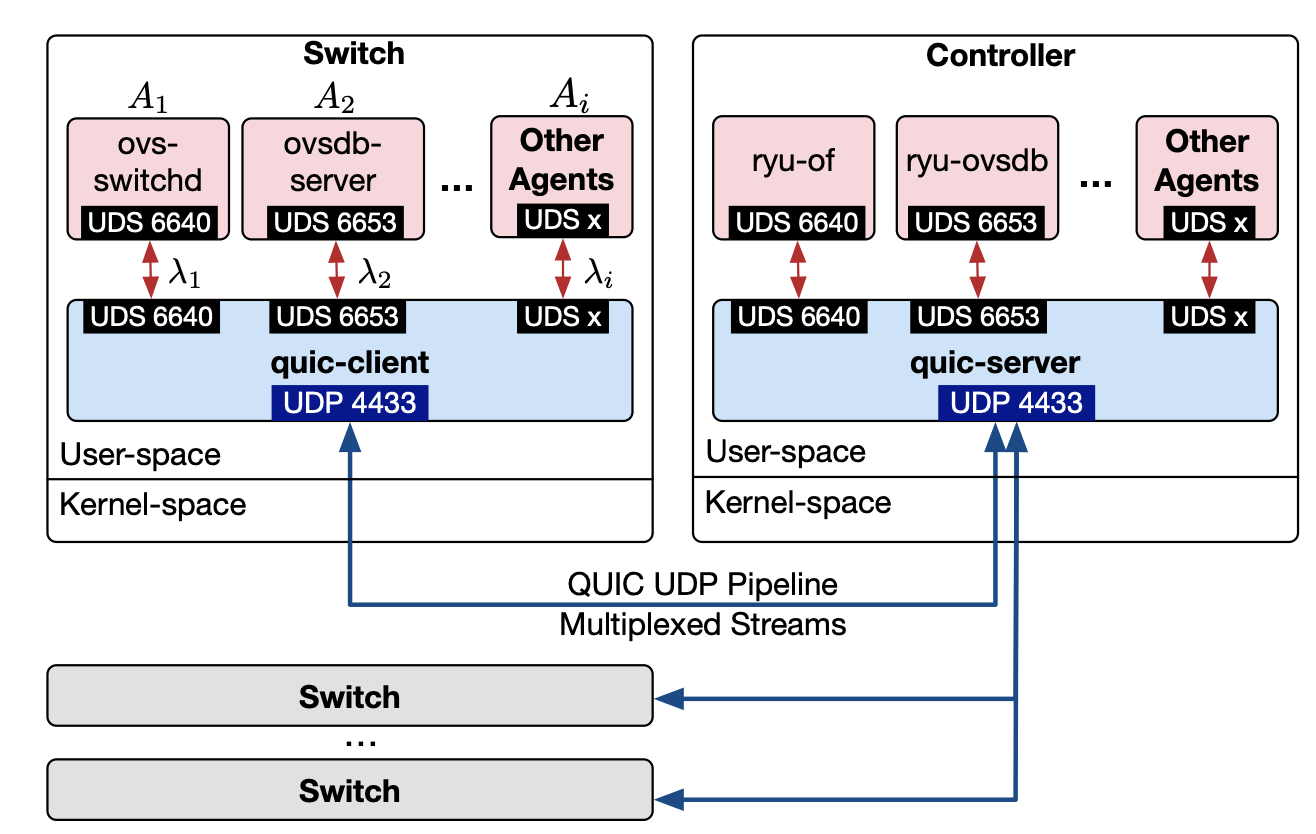

quicSDN: Transitioning from TCP to QUIC for Southbound Communication in SDNs

In Software-Defined Networks (SDNs), the control plane and data plane communicate for various purposes, such as applying configurations and collecting statistical data. While various methods have been proposed to reduce the overhead and enhance the scalability of SDNs, the impact of the transport layer protocol used for southbound communication has not been investigated. Existing SDNs rely on TCP (and TLS) to enforce reliability and security. In this paper, we show that the use of TCP imposes a considerable overhead on southbound communication, identify the causes of this overhead, and demonstrate how replacing TCP with QUIC can enhance the performance of this communication. We introduce the quicSDN architecture, enabling southbound communication in SDNs via the QUIC protocol. We present a reference architecture based on the standard, most widely used protocols by the SDN community and show how the controller and switch are revamped to facilitate this transition. We compare, both analytically and empirically, the performance of quicSDN versus the traditional SDN architecture and confirm the superior performance of quicSDN.

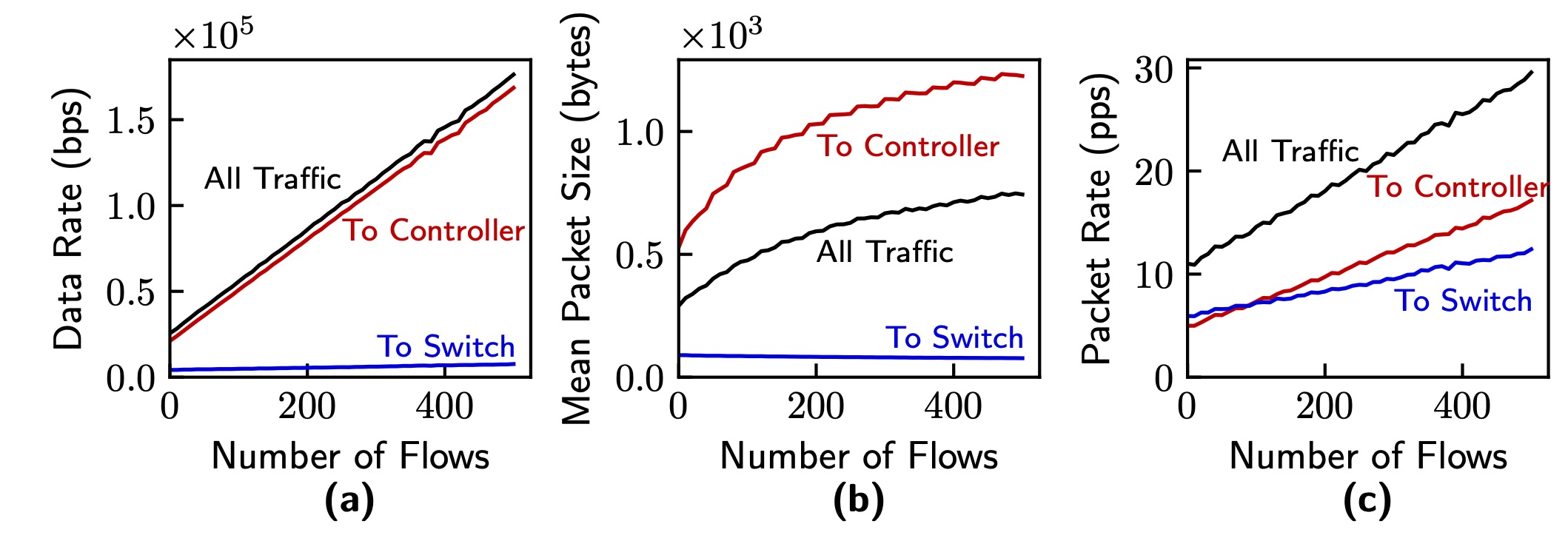

Modeling Control Traffic in Software-Defined Networks

The southbound control protocols used in Software-Defined Networks (SDNs) allow for centralized control and management of the data plane. However, these protocols introduce additional traffic and delay between network controllers and switches. Despite the well-understood capabilities of SDNs, current representations of control traffic overhead consist of approximations at best. In addition to high reactivity to incoming flows, the need for resource allocation and deterministic messaging delay necessitates a thorough understanding and modeling of the amount of control traffic and its effect on latency. In this work, we capture the network overhead of various switch configurations on a testbed and extract mathematical models to predict expected overhead for arbitrary switch configurations. We demonstrate that controller-switch traffic patterns are non-negligible and can be accurately modeled to compute the bandwidth utilization and latency of controller-switch communication.

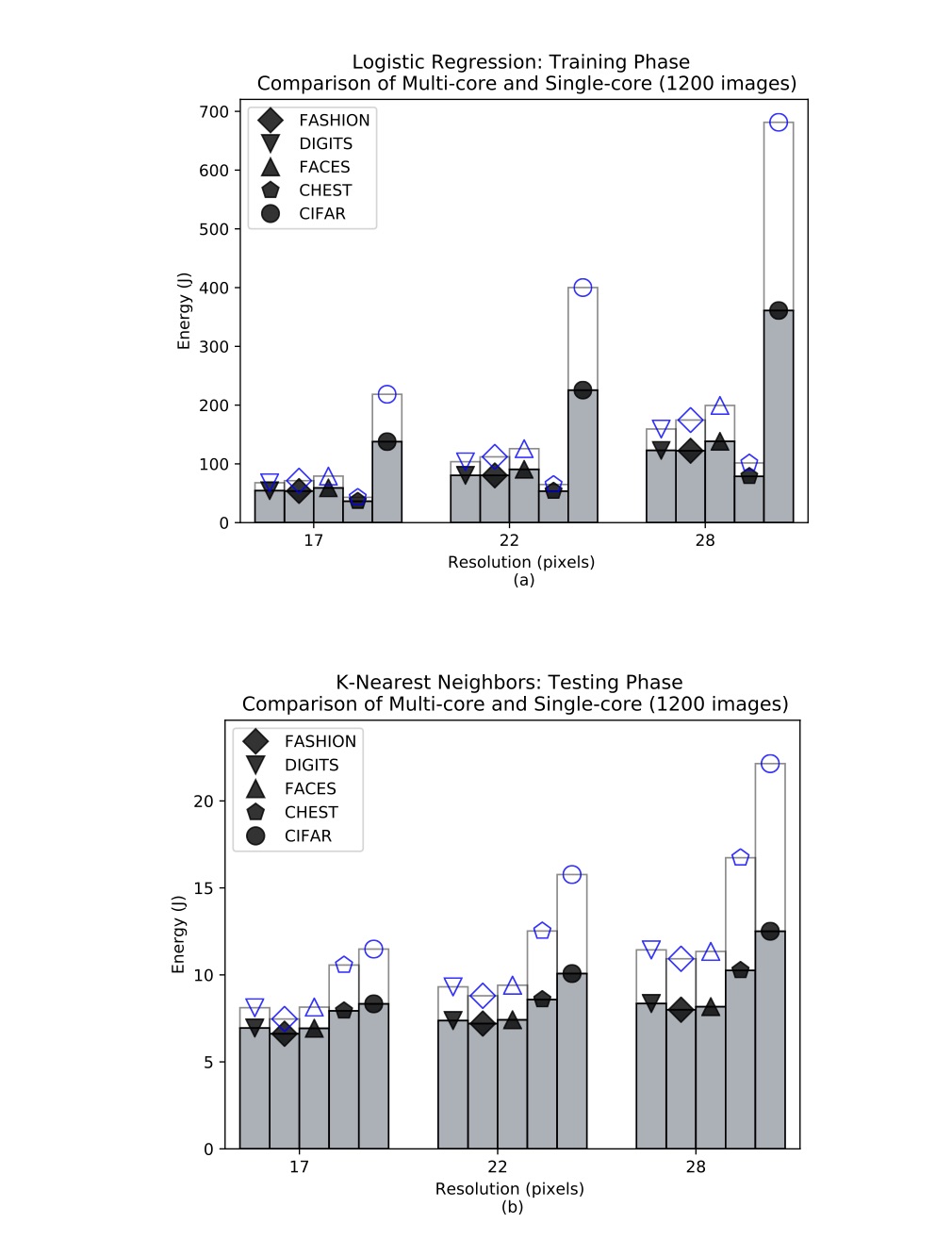

Image classification on IoT edge devices: profiling and modeling

With the advent of powerful, low-cost IoT systems, processing data closer to where the data originates, known as edge computing, has become an increasingly viable option. In addition to lowering the cost of networking infrastructures, edge computing reduces edge-cloud delay, which is essential for mission-critical applications.

We show the feasibility and study the performance of image classification using IoT devices. Specifically, we explore the relationships between various factors of image classification algorithms that may affect energy consumption, such as dataset size, image resolution, algorithm type, algorithm phase, and device hardware. In order to provide a means of predicting the energy consumption of an edge device performing image classification, we investigate the usage of three machine learning algorithms using the data generated from our experiments. The performance as well as the trade-offs for using linear regression, Gaussian process, and random forests are discussed and validated.

Tags: Edge Computing; Fog Computing; Image Classification; Energy; Machine Learning; Processor; Testbed

Profiling and Improving the Duty-Cycling Performance of Linux-based IoT Devices

Minimizing the energy consumption of Linux-based devices is an essential step towards their wide deployment in various IoT scenarios. Energy saving methods such as duty-cycling aim to address this constraint by limiting the amount of time the device is powered on. We study and improve the amount of time a Linux-based IoT device is powered on to accomplish its tasks. We analyze the processes of system boot up and shutdown on two platforms, the Raspberry Pi 3 and Raspberry Pi Zero Wireless, and enhance duty-cycling performance by identifying and disabling time-consuming or unnecessary units initialized in the userspace. We also study whether SD card speed and SD card capacity utilization affect boot up duration and energy consumption. In addition, we propose Pallex, a parallel execution framework built on top of the 'systemd init system' to run a user application concurrently with userspace initialization. We validate the performance impact of Pallex when applied to various IoT application scenarios: (i) capturing an image, (ii) capturing and encrypting an image, (iii) capturing and classifying an image using the k-nearest neighbor algorithm, and (iv) capturing images and sending them to a cloud server.
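The reason powered-on time matters so much under duty-cycling can be seen from a simple energy model, sketched below in Python. The function names and the two-state model (one power level while on, one while off) are assumptions made for illustration. Real boot, task, and shutdown phases draw different amounts of power, and any example values are hypothetical rather than measurements from this work.

```python
def energy_per_cycle_j(p_on_w: float, t_on_s: float, p_off_w: float, period_s: float) -> float:
    """Energy (J) used in one duty cycle: powered on for t_on_s, off otherwise."""
    if not 0 <= t_on_s <= period_s:
        raise ValueError("t_on_s must lie within the cycle period")
    return p_on_w * t_on_s + p_off_w * (period_s - t_on_s)


def average_power_w(p_on_w: float, t_on_s: float, p_off_w: float, period_s: float) -> float:
    """Average power (W) over one cycle."""
    return energy_per_cycle_j(p_on_w, t_on_s, p_off_w, period_s) / period_s
```

When the off-state power is close to zero, average power scales almost linearly with the powered-on time, so shortening boot up and shutdown translates directly into energy savings.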

Tags: Linux; Energy; Duty Cycle; Edge Computing; Fog Computing; Image Classification; Machine Learning; Processor; Testbed

EdgeAP: Enabling Edge Computing on Wireless Access Points

With the rise of the Internet of Things (IoT) leading to an explosion in the number of internet-connected devices, the current cloud computing paradigm is approaching its limits. Moving data back and forth between its origin and a far-away data center leads to issues regarding privacy, latency, and energy consumption. Edge computing, which instead processes data as close to its origin as possible, offers a promising solution to the pitfalls of cloud computing.

Our proof-of-concept edge computing platform, EdgeAP, is a programmable platform for the delivery of applications on wireless access points. Use cases of the platform will be demonstrated via an example application. Additionally, the viability of edge computing on wireless access points will be thoroughly evaluated.

Tags: Edge Computing; Fog Computing; Image Classification; Machine Learning; Container; Testbed

Additional Publication

Edge Mining on IoT Devices Using Anomaly Detection

Securing Internet of Things Systems

Despite the significant increase in the number of IoT devices, these devices remain vulnerable to diverse security and privacy attacks, such as Distributed Denial of Service (DDoS) attacks, energy-oriented DDoS (E-DDoS) attacks, harvesting and forging data, blackmail/extortion, bitcoin mining, stalking, or robbery. The reasons are multi-fold. First, because of competition and revenue gain, many manufacturers disregard the security aspects of IoT devices, which demand resources and skills and add to the cost. Second, consumers are usually not well educated about the potential security issues caused by arbitrarily adding IoT devices into their home networks, not to mention the importance of keeping their IoT devices’ security features up-to-date. Third, smart home devices usually share a WiFi Access Point (AP) for Internet access and local interconnection.

Tags: WiFi; IoT; Security; Association; Energy; Testbed

A Quantitative Study of DDoS and E-DDoS Attacks on WiFi Smart Home Devices

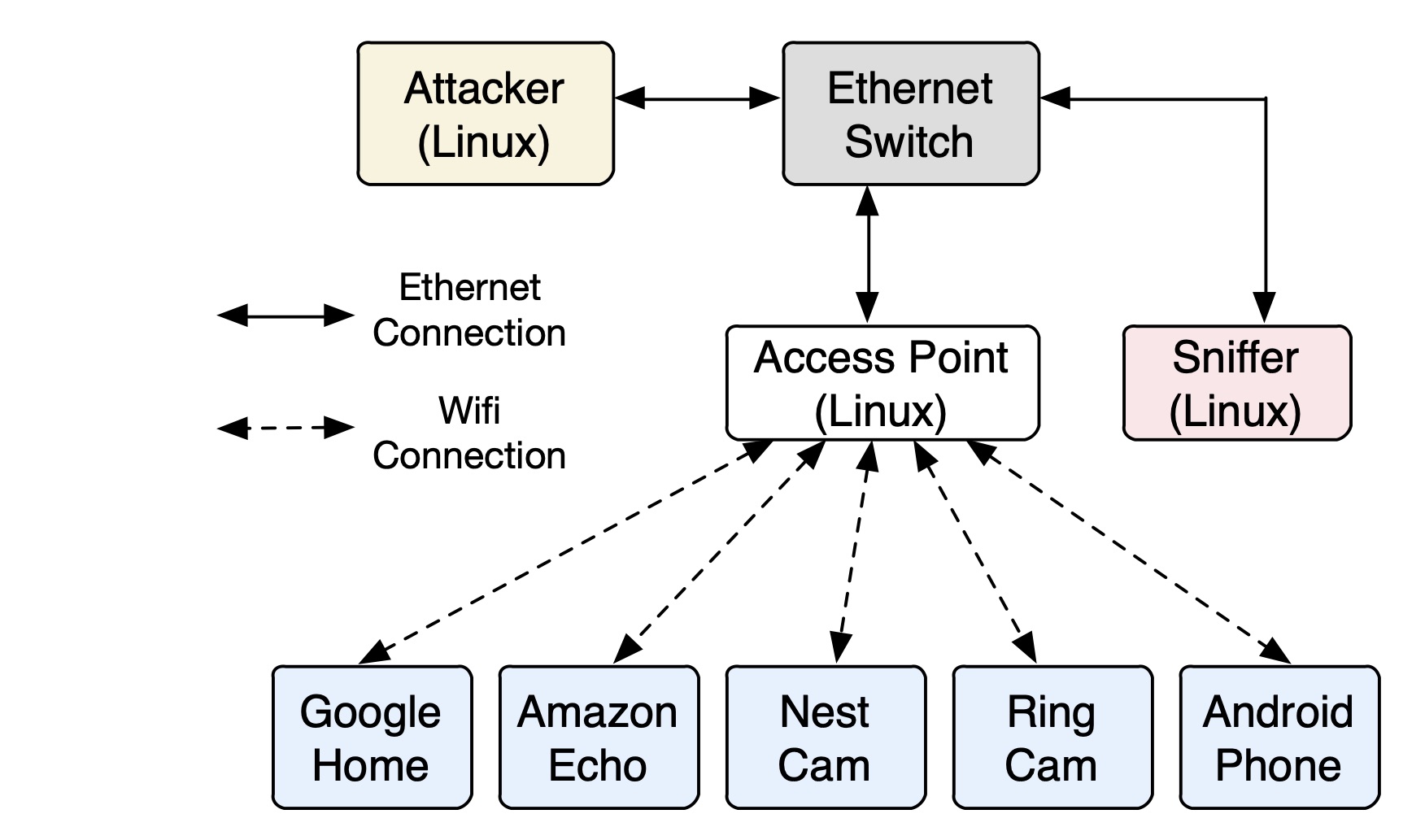

We quantify the impact of Distributed Denial of Service (DDoS) and energy-oriented DDoS (E-DDoS) attacks on WiFi smart home devices and explore the underlying reasons from the perspective of an attacker, victim device, and access point (AP). Compared to the existing work, which primarily focuses on DDoS attacks launched by compromised IoT devices against servers, our work focuses on the connectivity and energy consumption of IoT devices when under attack.

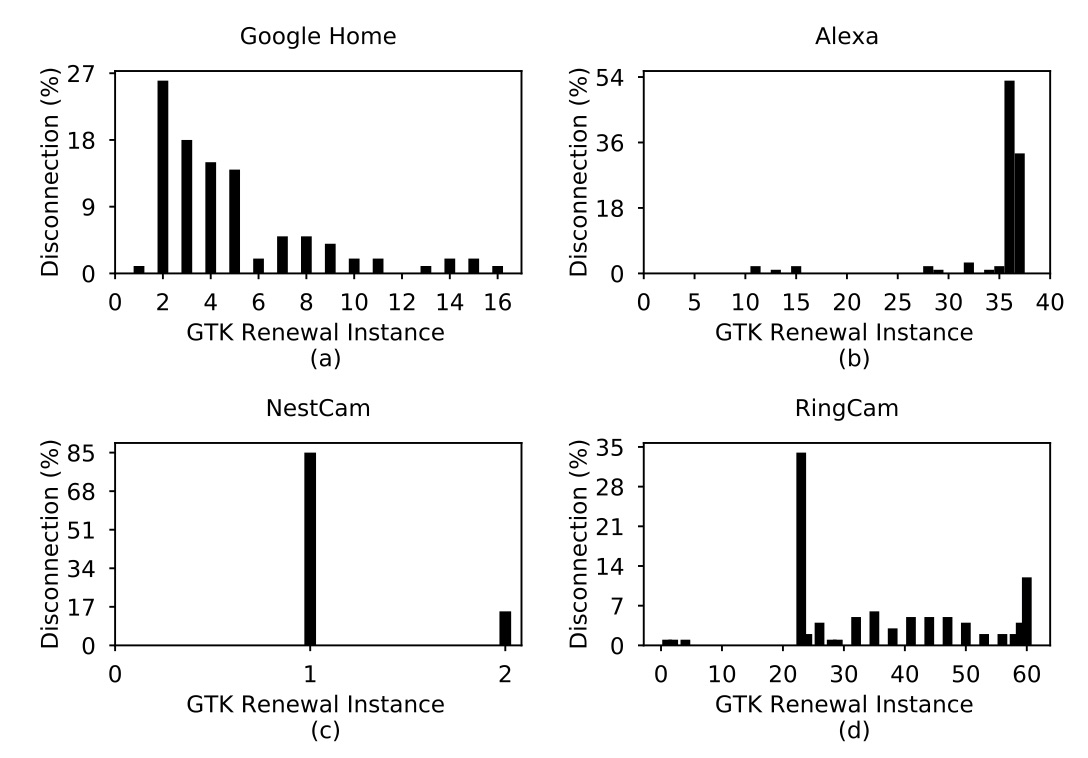

Our key findings are three-fold. First, the minimum DDoS attack rate causing service disruptions varies significantly among different IoT smart home devices, and buffer overflow within the victim device is validated as a critical factor. Second, the group key updating process of WiFi may facilitate DDoS attacks by causing faster victim disconnections. Third, a higher E-DDoS attack rate sent by the attacker may not necessarily lead to a victim’s higher energy consumption. Our study reveals the communication protocols, attack rates, payload sizes, and victim devices’ port states as the vital factors that determine the energy consumption of victim devices. These findings facilitate a thorough understanding of IoT devices’ potential vulnerabilities within a smart home environment and pave solid foundations for future studies on defense solutions.

Tags: IoT; Security; Machine Learning; Device Classification

Securing Smart Homes via Software-Defined Networking and Low-Cost Traffic Classification

IoT devices have become popular targets for various network attacks due to their lack of industry-wide security standards. In this work, we focus on the classification of smart home IoT devices and defending them against Distributed Denial of Service (DDoS) attacks. The proposed framework protects smart homes by using VLAN-based network isolation. This architecture includes two VLANs: one with non-verified devices and the other with verified devices, both of which are managed by an SDN controller. Lightweight, stateless flow-based features, including ICMP, TCP, and UDP protocol percentage, packet count and size, and IP diversity ratio, are proposed for efficient feature collection. Further analysis is performed to minimize training data to run on resource-constrained edge devices in smart home networks. Three popular machine learning models, including K-Nearest-Neighbors, Random Forest, and Support Vector Machines, are used to classify IoT devices and detect different DDoS attacks based on TCP-SYN, UDP, and ICMP. The system’s effectiveness and efficiency are evaluated by emulating a network consisting of an Open vSwitch, Faucet SDN controller, and flow traces of several IoT devices from two different testbeds. The proposed framework achieves an average accuracy of 97% in device classification and 98% in DDoS detection with an average latency of 1.18 milliseconds.
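The stateless flow-based features listed above can be computed in a single pass over a window of packets. The Python sketch below shows one possible reading of them. The simplified packet record, the function name `flow_features`, and in particular the definition of the IP diversity ratio (distinct destination addresses divided by packet count) are assumptions, since the text above does not define them.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical, simplified packet record: (protocol, size_bytes, dst_ip).
PacketRecord = Tuple[str, int, str]


def flow_features(packets: List[PacketRecord]) -> Dict[str, float]:
    """Compute lightweight, stateless features over one window of packets."""
    n = len(packets)
    if n == 0:
        raise ValueError("need at least one packet")
    protos = Counter(proto.upper() for proto, _, _ in packets)
    total_bytes = sum(size for _, size, _ in packets)
    distinct_dsts = len({dst for _, _, dst in packets})
    return {
        "icmp_pct": 100.0 * protos["ICMP"] / n,
        "tcp_pct": 100.0 * protos["TCP"] / n,
        "udp_pct": 100.0 * protos["UDP"] / n,
        "packet_count": float(n),
        "mean_size": total_bytes / n,
        "ip_diversity": distinct_dsts / n,  # assumed definition
    }
```

Because none of these features requires tracking connection state, a feature vector like this is cheap to produce on a resource-constrained edge device before it is passed to a classifier.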

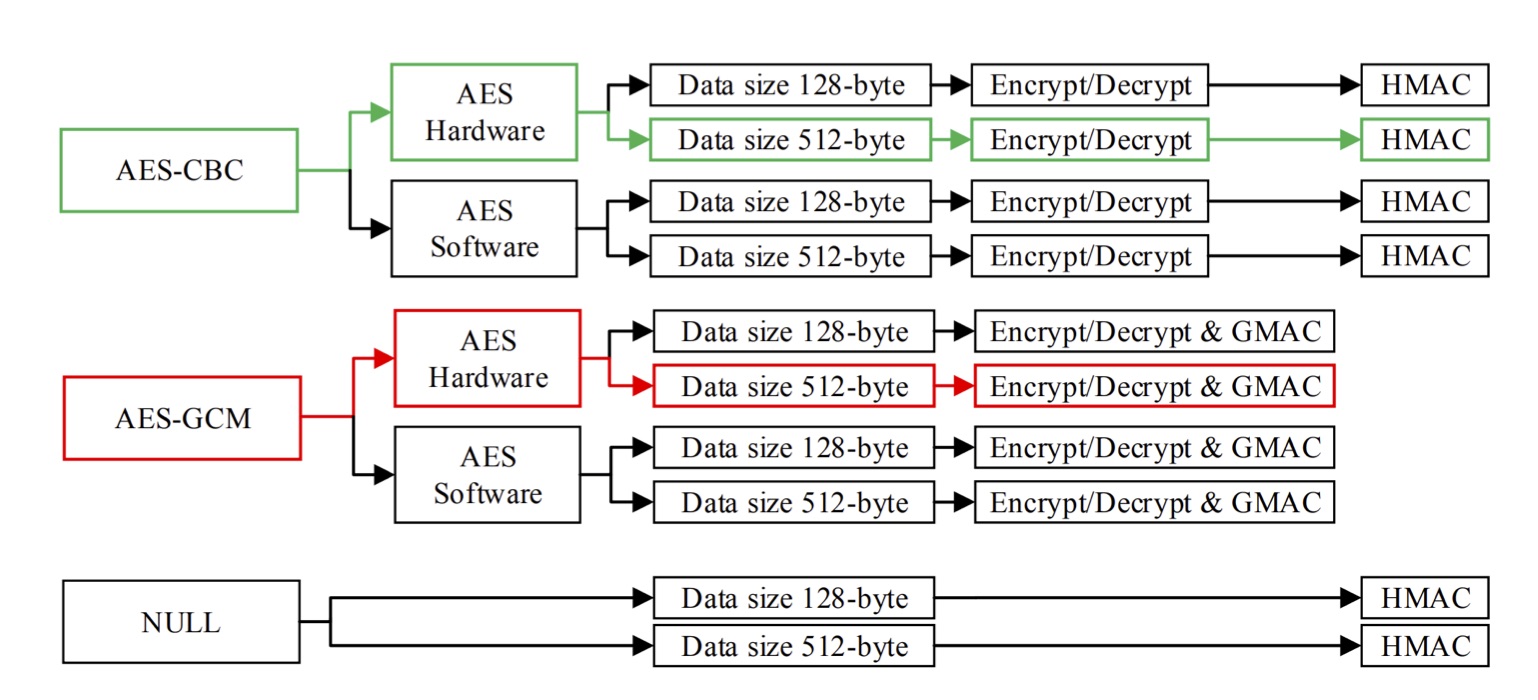

Tags: IoT; Security; TLS; DTLS; Energy; Testbed

A Comprehensive Empirical Analysis of TLS Handshake and Record Layer on IoT Platforms

The Transport Layer Security (TLS) protocol has been considered as a promising approach to secure Internet of Things (IoT) applications. The different cipher suites offered by the TLS protocol play an essential role in determining communication security level. Each cipher suite encompasses a set of cryptographic algorithms, which can vary in terms of their resource consumption and significantly influence the lifetime of IoT devices.

We present a comprehensive study of the widely used cryptographic algorithms by annotating their source code and running empirical measurements on two state-of-the-art, low-power wireless IoT platforms. Specifically, we present fine-grained resource consumption of the building blocks of the handshake and record layer algorithms and formulate tree structures that present various possible combinations of ciphers as well as individual functions. Depending on the parameters, a path is selected and traversed to calculate the corresponding resource impact. Our studies enable IoT developers to change cipher suite parameters and observe the resource costs.

Developing Energy-Efficient and Reliable Sensing Systems

Design and development of low-power, reliable sensing systems requires application-oriented hardware design, tailored communication protocols, accurate power measurement, accurate sensor calibration, and extensive performance evaluations.

Tags: IoT; Energy; Programmability; Range

Tags: Flood; Monitoring; Prevention; Wireless; Long Range; Security; Deployment

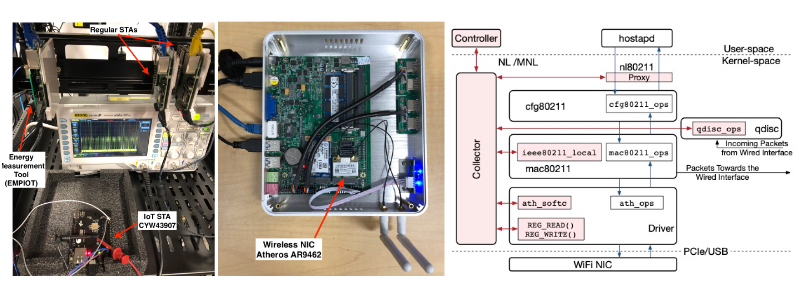

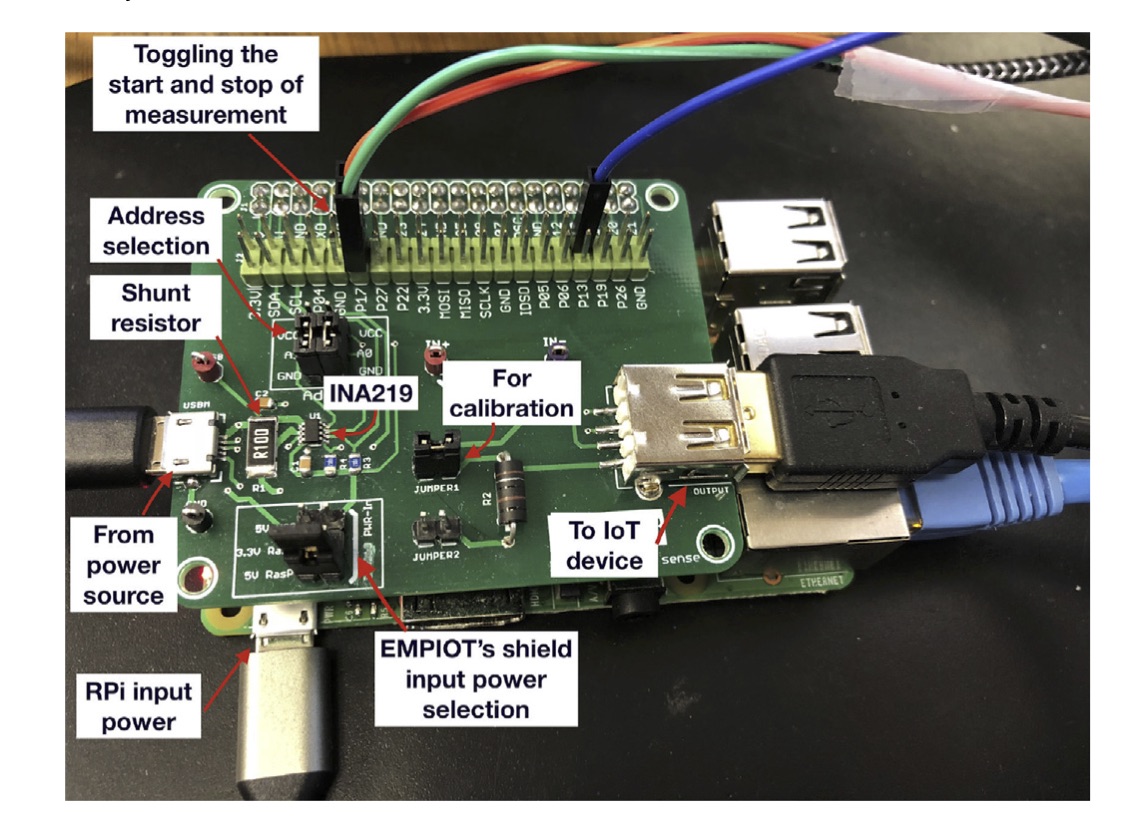

EMPIOT: An energy measurement platform for wireless IoT devices

Profiling and minimizing the energy consumption of resource-constrained devices is an essential step towards employing IoT in various application domains. Due to the large size and high cost of commercial energy measurement platforms, alternative solutions have been proposed by the research community. However, the three main shortcomings of existing tools are complexity, limited measurement range, and low accuracy. Specifically, these tools are not suitable for the energy measurement of new IoT devices such as those supporting the 802.11 technology.

We propose EMPIOT, an accurate, low-cost, easy-to-build, and flexible power measurement platform. We present the hardware and software components of this platform and study the effect of various design parameters on accuracy and overhead. In particular, we analyze the effects of driver, bus speed, input voltage, and buffering mechanism on sampling rate, measurement accuracy, and processing demand. These extensive experimental studies enable us to configure the system in order to achieve its highest performance. Using five different IoT devices performing four types of workloads, we evaluate the performance of EMPIOT against the ground truth obtained from a high-accuracy industrial-grade power measurement tool.
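To show how sampled voltage and current readings turn into an energy figure, the following Python sketch integrates instantaneous power over time with the trapezoidal rule. This is a generic post-processing step written for illustration, not EMPIOT's software, and the sample tuple layout and the name `energy_joules` are assumptions.

```python
from typing import List, Tuple

# Assumed sample layout: (time_s, voltage_v, current_a), sorted by time.
Sample = Tuple[float, float, float]


def energy_joules(samples: List[Sample]) -> float:
    """Integrate power (V * I) over time using the trapezoidal rule."""
    energy = 0.0
    for (t0, v0, i0), (t1, v1, i1) in zip(samples, samples[1:]):
        energy += 0.5 * (v0 * i0 + v1 * i1) * (t1 - t0)
    return energy
```

The estimate improves as the sampling rate increases, because short current spikes between samples are otherwise averaged away. This is one reason sampling rate is studied alongside accuracy.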

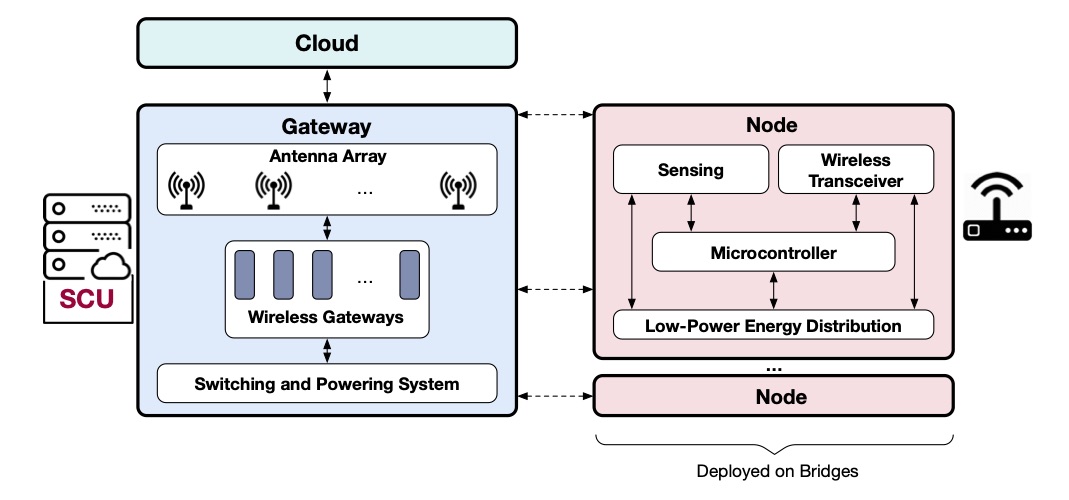

Flomosys: A Flood Monitoring System

Every year, floods cause significant and preventable financial losses, not to mention safety hazards. To warn people ahead of time, we can deploy low-power wireless sensor nodes to send readings across any terrain to a cloud platform, which can perform pattern analysis, prediction, and alert forwarding to anyone’s cellular device.

In this project, we propose Flomosys, a low-cost, low-power, secure, scalable, reliable, and extensible IoT system for monitoring creek and river water levels. Although there are multiple competing solutions to help mitigate this problem, Flomosys fills a niche not covered by existing solutions. Flomosys can be built inexpensively with off-the-shelf components and scales across vast territories at a low cost per sensor node. We present the design and implementation of this system as well as real-world test results.

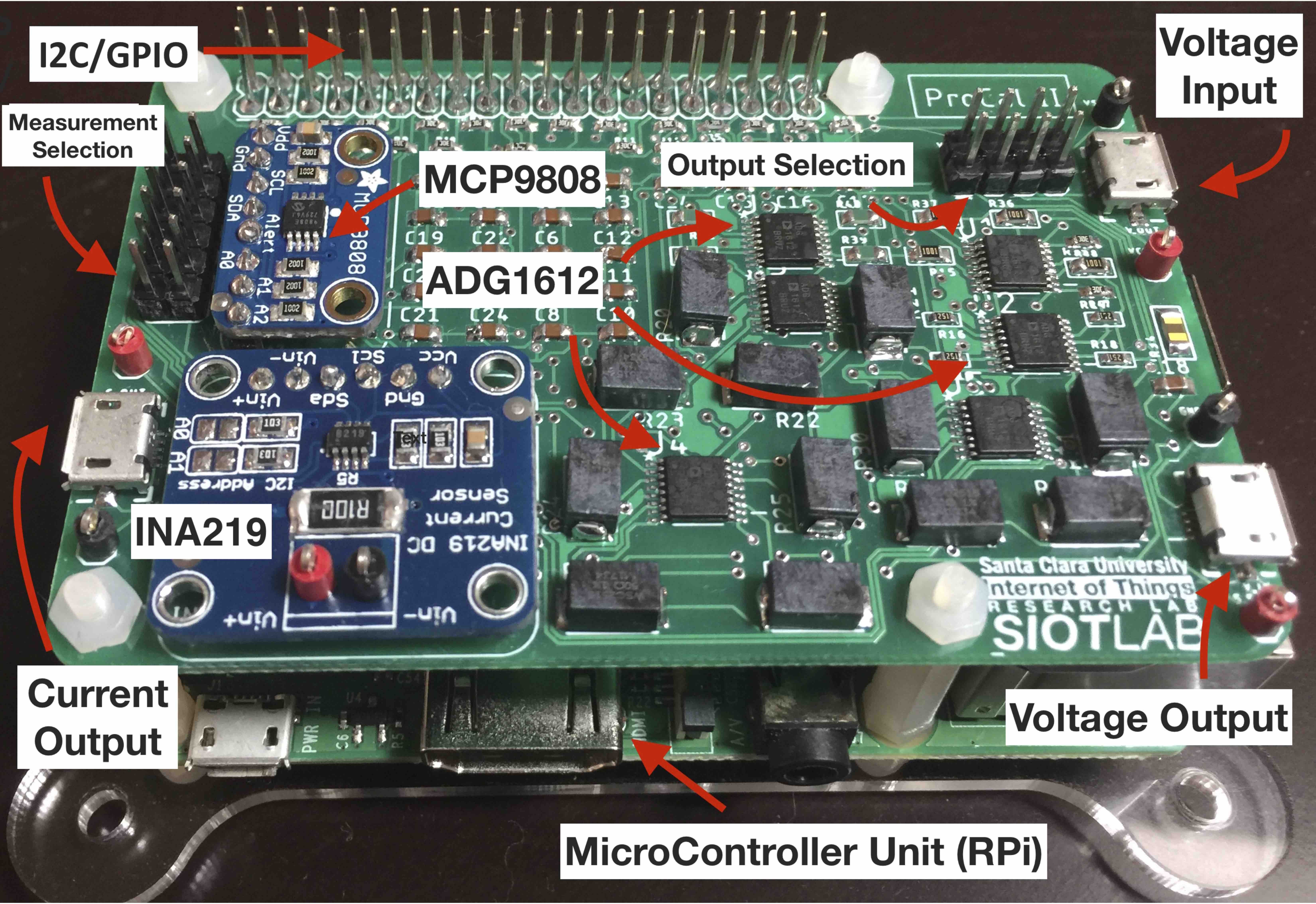

Tags: Sensing; Accuracy; Calibration; Low-cost; Range; Programmability

Excalibur: An Accurate, Scalable, and Low-Cost Calibration Tool for Sensing Devices

Calibration of an analog-to-digital converter is an essential step to compensate for static errors and ensure accurate digital output. In addition, ad-hoc deployments and operations require fault-tolerant IoT devices capable of adapting to unpredictable environments. In this paper, we present the design and implementation of Excalibur – a low-cost, accurate, and scalable calibration tool. Excalibur is a programmable platform, which provides linear current output and rational function voltage output with a dynamic range. The basic idea is to use a set of digital switches to connect with a parallel resistor network and program the digital switches to change the total resistance of the circuit. The total resistance and output frequency of Excalibur are controlled by a program communicating through the GPIO and I2C interfaces. The software provides two salient features to improve accuracy and reliability: time synchronization and self-calibration. Furthermore, Excalibur is equipped with a temperature sensor to measure the temperature before calibration, and a current sensor which enables current calibration without using a digital multimeter. We present the mathematical model and a solution to compensate for thermal and wire resistance effects and validate scalability by incorporating the concept of the Fibonacci sequence. Our extensive experimental studies show that Excalibur can significantly improve measurement accuracy.
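The basic idea of switching branches of a parallel resistor network in and out can be sketched with the standard parallel resistance formula. In the Python sketch below, the function name `total_resistance`, the bit-mask representation of the switches, and any resistor values are assumptions for illustration. Thermal and wire resistance effects, which the paper models, are left out.

```python
from typing import Sequence


def total_resistance(resistors_ohm: Sequence[float], switch_mask: int) -> float:
    """Resistance of the parallel network for a given switch setting.

    Bit i of switch_mask closes the switch in series with resistors_ohm[i].
    """
    conductance = sum(
        1.0 / r for i, r in enumerate(resistors_ohm) if (switch_mask >> i) & 1
    )
    if conductance == 0.0:
        return float("inf")  # every switch open: no conducting branch
    return 1.0 / conductance
```

With n switched branches there are 2^n settings, so a small number of resistors can already cover many distinct output levels.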

We extend our gratitude to the following companies and organizations for their generous funding, the donation of essential equipment, and/or invaluable technical support.

Copyright 2024 | Behnam Dezfouli